Primal dual relationships are often at the heart of ideas in optimization and in applications of linear algebra. From advanced lagrangian duals to linear programming methods - all of them draw inspiration from the simple idea of duality.

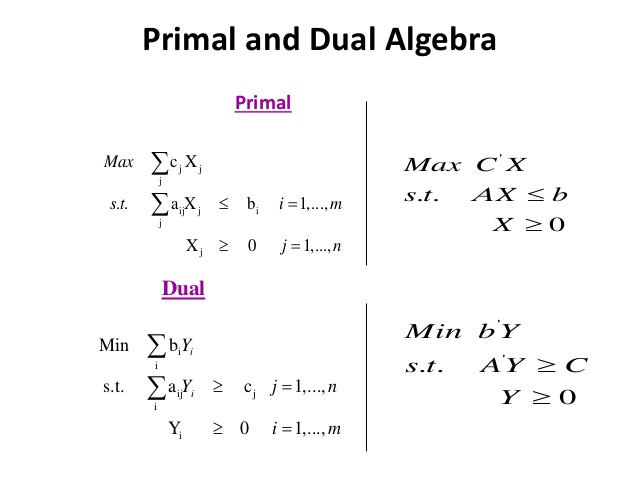

Often the idea is quite misunderstood. The underlying simplicity is marred by complex notation and rigor. You are likely to get introduced to primal dual relationship in linear programming as following, in most textbooks:

Fig1: Primal and dual of a linear programming problem

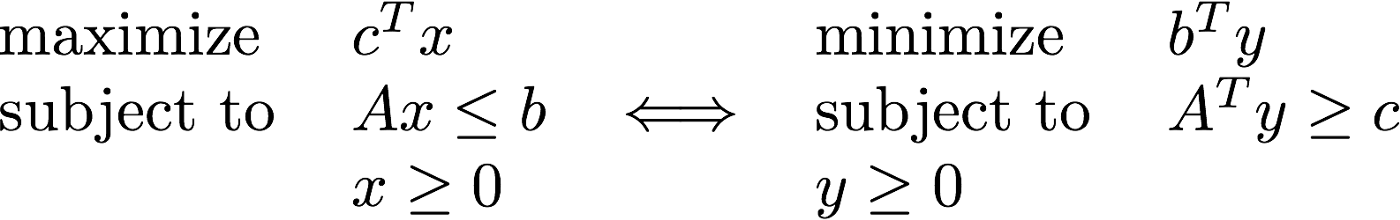

There is a more condensed representation of the above using linear algebra and matrix notation. However, it still doesn’t throw any light on the essence and motivation behind the primal and dual relationship.

Fig2: The condensed matrix notation

After giving the primal and dual relationship in linear programming a read, I decided to take a bite at explaining it. I hope this interpretation of duality will allow you to get a more intuitive understanding of it. Hopefully, helping the reader on their quest to apply this first principle whenever they encounter duality in much more complex and advanced scenarios. Comments from the learned community and those who are familiar with the topic are genuinely appreciated!

Okay, firstly lets brush up what a typical linear programming problem looks like:

Linear Programming Review

Let us suppose that during this lockdown you want to catch up on all your previous guilty pleasures and hobbies. In turn you wish to the maximize happiness and joy from all these activities. However, certain physical restrictions may still apply! For example, one may not be able to continue an activity or combination of certain activities for an extended period because you still don’t have sufficient time or the activities can be exhausting. Given these realistic constraints, you wish to maximize the happiness that can be achieved. This can be formulated as a linear programming problem!

To not make our example complex, let’s say you like to engage in two activities: dancing and painting.

Let the number of hours you paint and dance in a day be x1 and x2 resp.

Let’s say the happiness is some function of these two activities H(x1, x2) = 6x1+5x2.

The happiness is maximized subject to some constraints.

If you cannot give more than five hours a day to any leisure, it translates to, x1 + x2 <= 5

Given the rate of energy each activity consumes and given you cannot spend more than 12 units of energy, translates to 3x1 + 4x2 <= 12

Since you cannot do any activity for negative hours both x1, x2 have to be non negative x1, x2 >= 0

Then the typical linear program can look like:

maximize H(x1, x2) = 6x1 + 5x2

subject to:

x1 + x2 <= 5

3x1 + 4x2 <= 12

x1, x2 >= 0

The primal problem

Now, this is easy. The original optimization problem is assumed to be a primal. So the primal is the same as the linear program:

maximize H(x1, x2) = 6x1 + 5x2

subject to:

x1 + x2 <= 5

3x1 + 2x2 <= 12

x1, x2 >= 0

How should we begin to approach such a problem analytically if we are not interested in the graphical method to solve linear programs? Let’s suppose there are no conditions on the objective

maximize H(x1, x2) = 6x1 + 5x2

Then, the maximum value this objective can achieve is +∞. This sort of gives us an upper bound to the maximum value the objective can take. Although a lousy one, I agree. However, can we do better? Is it possible to reduce or minimize this lousy upper bound? The answer turns out to be yes!

If you notice the second condition, 3x1 + 2x2 <= 12 .

The trick is to multiply this equation by 3 . Which results in 9x1 + 6x2 <= 36 and for non-negative variables i.e. x1, x2 >= 0. If the coefficients of these variables in the inequality is greater than the coefficients of corresponding variables in the objective then the right hand side acts as the upper bound.

H(x1, x2) = 6x1 + 5x2 <= 9x1 + 6x2 <=36

So, 36 is the new upper bound for H(x1, x2). Can we do even better? Yes! Let’s look at the first constraint and multiply it by 6. In turn we get 6x1 + 6x2 <=30. So the latest upper bound is 30. 30 is lower than 36 as an upper bound. In general sense, it is better since it is much more tight upper bound to our maximization problem.

Last time, can we do even better? Let’s use both the constraints at once. If we multiply first equation by 3 and second equation by 1 and add both of them.

3x1 + 3x2 <= 15

+ 3x1 + 2x2 <= 12

6x1 + 5x2 <= 27

so, H(x1, x2) = 6x1+5x2 <= 27

We have finally been able to get a very tight upper bound on our maximization problem i.e. 27. Notice while we were supposed to solve a maximization problem in our approach, we used mimization to solve maximization! Thanos much?. The minimization problem we solved is called the dual to the maximization primal!

The Dual problem

While we are almost there, for sake of completeness let’s put our ideas together in a mathematical framework. Suppose to get the tighest upper bound to the maximum, you want to multiply constraint by non-negative numbers. First one by y1 and second constraint by y2 ; add them and compare it to the H(x1,x2). Now,

y1*(x1 + x2) <= 5y1

+y2*(3x1 + 2x2) <= 12y2

(y1+3y2)*x1 + (y1+2y2)*x2 <= 5y1 + 12y2 (A)

6*x1 + 5*x2 = H(x1, x2)

The coefficients x1, x2 of in (A) and in H(x1, x2) have to be compared to justify that RHS of (A) will give upper bound on our objective.

So, eventually we compare the above expression with H(x1, x2). The corresponding coefficients of x1 and x2 must be greater than equal to the coefficients of x1, x2 in H(x1, x2) for it to give the upper bound on the primal. And then the sum right hand side of the constraints becomes the upper bound.

Our equivalent objective would be to minimize that upper bound so the equivalent dual would be:

minimize D(y1, y2) = 5y1 + 12y2

+y1 + 3y2 >= 6

y1 + 2y2 >= 5

y1, y2 >= 0

Takeaways!

Every problem can be thought of as a primal or dual problem. The conjugate of the primal is the dual and vice versa. In essence, a dual of maximization problem can be thought of as trying to get a tight bound for the maximum i.e. trying to minimize the upper bound from infinity to the maximum, slowly. Which becomes a minimization problem. Similarly for a minimization primal the dual would be to approach it as maximizing the lower bound to achieve tight lower bounds!

Needless to say, careful readers may wonder as we make the bounds increasingly tighter, does the bound ever equal to the answer of the original problem? The answer is yes and that’s what is called weak duality. Which is left to future discussion!